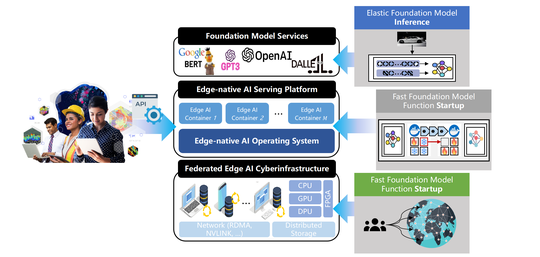

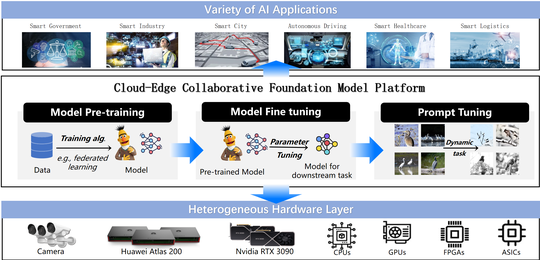

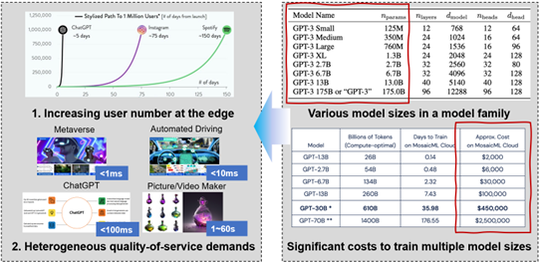

The unprecedented impact of foundation model technology, represented by ChatGPT, is driving a revolutionary paradigm shift in AI, bringing new opportunities and challenges to many industries. However, the high training, inference and maintenance costs of foundation model technologies limit their widespread adoption.

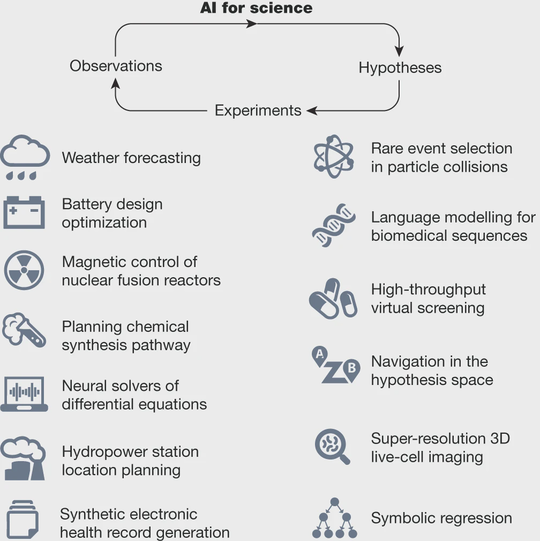

AI for Science (AI4Science) is an emerging field that explores the intersection of artificial intelligence (AI) and scientific research. It leverages the power of AI techniques and algorithms to analyze vast amounts of scientific data, accelerate discovery, and enhance our understanding of complex scientific phenomena.

In pursuit of building open, intelligent, and efficient AI large models, we aim to address the challenges posed by diverse data and resources distributed across edge devices, which can significantly impact the performance and scalability of large models.

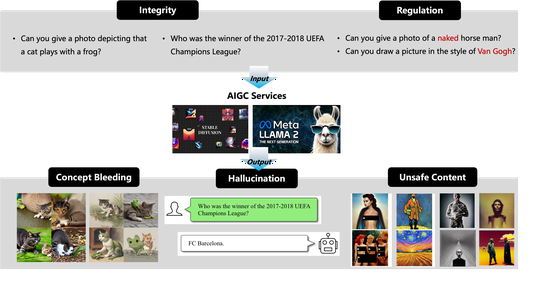

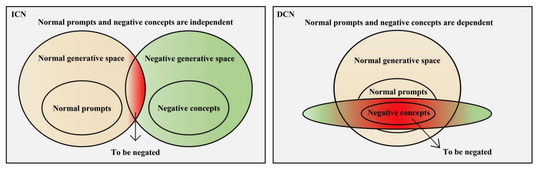

The swift advancement of AI-generated content (AIGC) has empowered users to create photorealistic images and engage in meaningful dialogues with foundation models. Despite these advancements, AIGC services face challenges, including concept bleeding, hallucinations, and unsafe content generation.

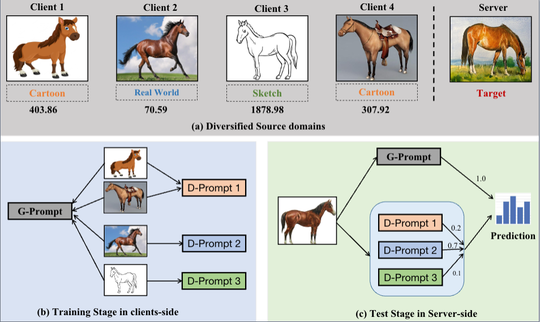

Federated learning (FL) has emerged as a powerful paradigm for learning from decentralized data. Federated domain generalization further considers the setting in which the test dataset (target domain) is absent from the decentralized training data (source domains).

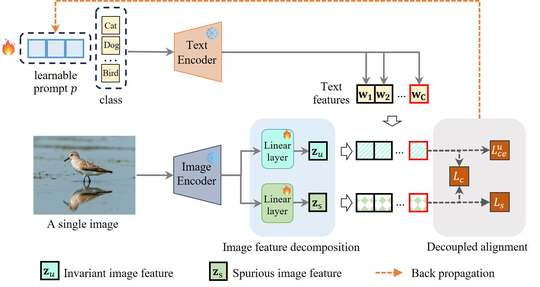

Fine-tuning the learnable prompt for a pre-trained vision-language model (VLM), such as CLIP, has demonstrated exceptional efficiency in adapting to a broad range of downstream tasks. Existing prompt tuning methods for VLMs do not distinguish spurious features introduced by biased training data from invariant features, and employ a uniform alignment process when adapting to unseen target domains.

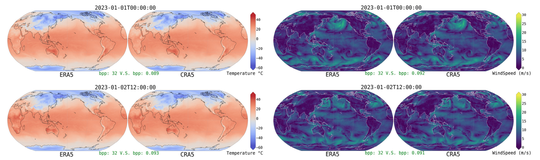

The paper introduces CRA5, a project that uses the Variational Autoencoder Transformer (VAEformer) to compress the ERA5 climate dataset from 226TB to just 0.7TB, achieving a compression ratio of over 300 times.
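
The compression ratio quoted above follows directly from the two dataset sizes. The short check below uses only the figures given in the summary; the function name is illustrative and not taken from the paper.

```python
# Sizes quoted in the summary above, in terabytes.
ORIGINAL_TB = 226.0
COMPRESSED_TB = 0.7


def compression_ratio(original: float, compressed: float) -> float:
    """Return how many times smaller the compressed data is."""
    if compressed <= 0:
        raise ValueError("compressed size must be positive")
    return original / compressed


ratio = compression_ratio(ORIGINAL_TB, COMPRESSED_TB)
print(f"compression ratio: about {ratio:.0f}x")  # prints "compression ratio: about 323x"
```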

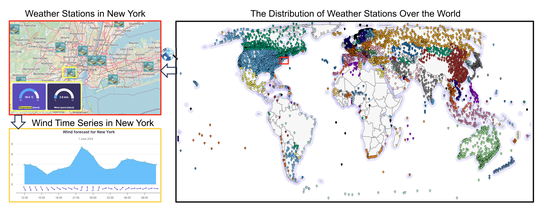

The paper presents the WEATHER-5K dataset, a comprehensive global weather station dataset designed to advance time-series weather forecasting benchmarks. WEATHER-5K includes data from 5,672 weather stations worldwide, covering a 10-year period with hourly intervals.

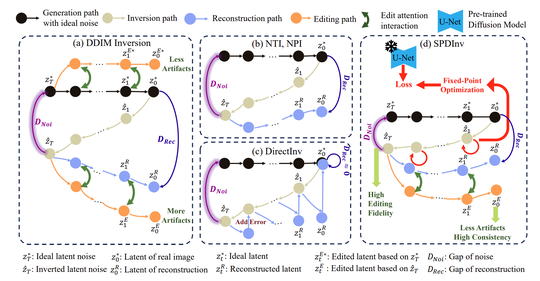

(ECCV2024) Source Prompt Disentangled Inversion for Boosting Image Editability with Diffusion Models

The paper presents a novel method called Source Prompt Disentangled Inversion (SPDInv) to enhance image editability using diffusion models. Traditional approaches often struggle because the inverted latent noise code is closely tied to the source prompt, hindering effective editing with target prompts.

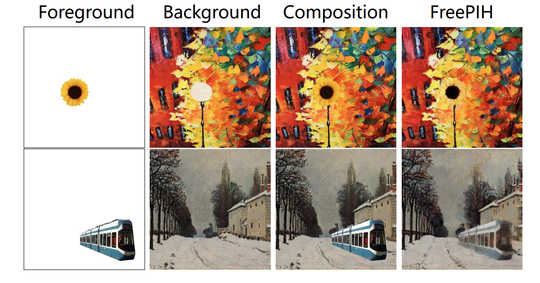

The paper introduces FreePIH, a novel method for painterly image harmonization using a pre-trained diffusion model without additional training. Unlike traditional methods that require fine-tuning or auxiliary networks, FreePIH leverages the denoising process as a plug-in module to transfer the style between the foreground and background images.

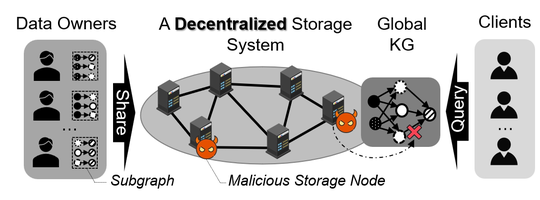

The ability to decentralize knowledge graphs (KG) is important to exploit the full potential of the Semantic Web and realize the Web 3.0 vision. However, decentralization also renders KGs more prone to attacks with adverse effects on data integrity and query verifiability.

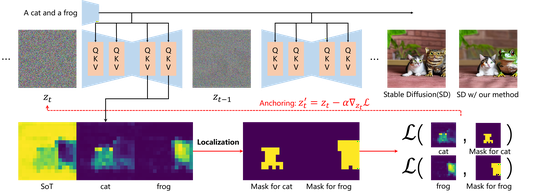

When generating multi-entity scenes, stable diffusion and its derivative models frequently encounter issues of entity overlap or fusion, primarily due to cross-attention leakage. To mitigate these challenges, we propose performing differentiation and binarization on cross-attention maps to accurately locate entities within non-overlapping areas.
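
As a rough illustration of the idea, the sketch below treats "differentiation" as subtracting the strongest competing entity's attention at each location and "binarization" as thresholding the result. This reading, the function name, the threshold value, and the toy 2x2 maps are all assumptions made for illustration, not the paper's actual procedure.

```python
from typing import List

AttentionMap = List[List[float]]
Mask = List[List[int]]


def differentiate_and_binarize(maps: List[AttentionMap], threshold: float = 0.0) -> List[Mask]:
    """Return one binary mask per entity; no location belongs to two entities.

    A location is assigned to an entity only if that entity's attention exceeds
    the strongest competing entity's attention by more than `threshold`.
    """
    if len(maps) < 2:
        raise ValueError("need at least two entity attention maps")
    if threshold < 0:
        raise ValueError("threshold must be non-negative to keep masks disjoint")
    height, width = len(maps[0]), len(maps[0][0])
    masks = [[[0] * width for _ in range(height)] for _ in maps]
    for y in range(height):
        for x in range(width):
            values = [m[y][x] for m in maps]
            for i, value in enumerate(values):
                strongest_other = max(values[:i] + values[i + 1:])
                if value - strongest_other > threshold:
                    masks[i][y][x] = 1
    return masks


# Toy attention maps for two entities (hypothetical values).
cat = [[0.9, 0.6], [0.2, 0.1]]
dog = [[0.1, 0.5], [0.3, 0.8]]
cat_mask, dog_mask = differentiate_and_binarize([cat, dog], threshold=0.05)
```

Because the threshold is non-negative, at most one entity can win any location, so the resulting masks never overlap.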

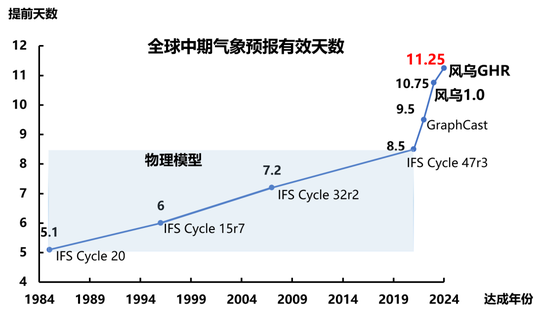

FengWu-GHR: Learning the Kilometer-scale Medium-range Global Weather Forecasting

FengWu-GHR is the first data-driven global weather forecasting model running at 0.09° horizontal resolution. It introduces a novel approach that opens the door to operational ML-based high-resolution forecasts by inheriting prior knowledge from a pretrained low-resolution model.

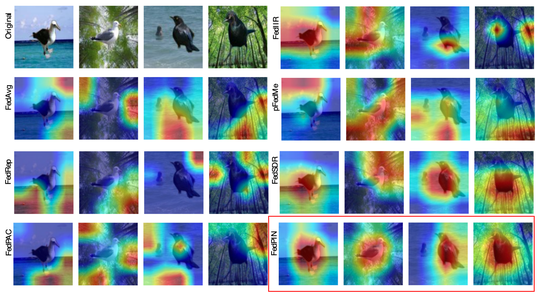

This paper introduces a novel method called FedPIN (Personalized Invariant Federated Learning with Shortcut-Averse Information-Theoretic Regularization) to address the out-of-distribution (OOD) generalization problem in personalized federated learning (PFL). By leveraging causal models and information-theoretic constraints, this approach aims to extract personalized invariant features while avoiding the pitfalls of spurious correlations.

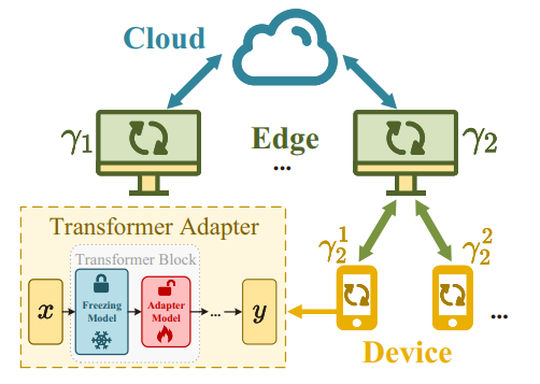

With the rapid advancement of giant models, the paradigm of pre-training models followed by fine-tuning for specific downstream tasks has become increasingly popular. In response to the challenges faced by adapter-based fine-tuning due to insufficient data, and the scalability and inflexibility issues of existing federated fine-tuning solutions, we introduce Tomtit.

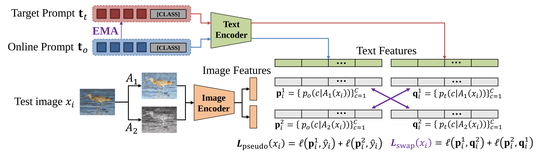

In this paper, we propose SwapPrompt, a novel framework that effectively leverages self-supervised contrastive learning to facilitate test-time prompt adaptation. SwapPrompt employs a dual-prompt paradigm, i.e., an online prompt and a target prompt that is averaged from the online prompt to retain historical information.
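
One common way to keep an averaged copy that retains history is an exponential moving average. The sketch below assumes that form; the update rule, the `momentum` value, and the representation of prompts as flat lists of floats are illustrative assumptions, since the summary only states that the target prompt is averaged from the online prompt.

```python
from typing import List


def ema_update(target: List[float], online: List[float], momentum: float = 0.99) -> List[float]:
    """Move the target prompt a small step toward the online prompt.

    A momentum close to 1 keeps more of the target prompt's history.
    """
    if not 0.0 <= momentum <= 1.0:
        raise ValueError("momentum must be in [0, 1]")
    if len(target) != len(online):
        raise ValueError("prompts must have the same length")
    return [momentum * t + (1.0 - momentum) * o for t, o in zip(target, online)]


# Hypothetical two-dimensional prompts, with a low momentum for readability.
target_prompt = [0.0, 1.0]
online_prompt = [1.0, 1.0]
target_prompt = ema_update(target_prompt, online_prompt, momentum=0.5)
```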

PROTORE works by incorporating CLIP’s language-contrastive knowledge to identify the prototype of negative concepts, extract the negative features from outputs using the prototype as a prompt, and further refine the attention maps by retrieving negative features.

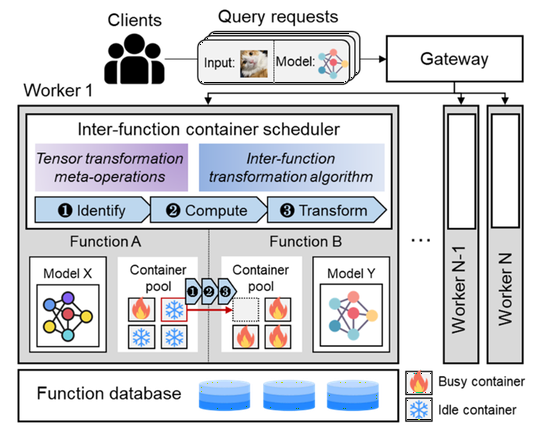

Serverless ML inference is an emerging cloud computing paradigm for low-cost, easy-to-manage inference services. In serverless ML inference, each call is executed in a container; however, the cold start of containers results in long inference delays.

Transformer-based architectures have become a pillar of cloud services that keep reshaping our society. However, dynamic query loads and heterogeneous user requirements severely challenge current transformer serving systems, which rely on pre-training multiple variants of a foundation model to accommodate varying service demands.

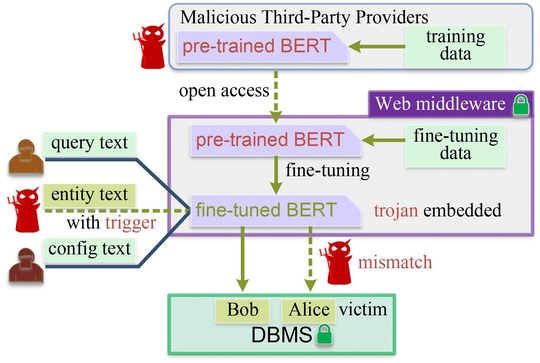

The recent success of pre-trained language models (PLMs) such as BERT has led to the development of various useful database middleware, including natural language query interfaces and entity matching. This shift has been greatly facilitated by the extensive external knowledge of PLMs.

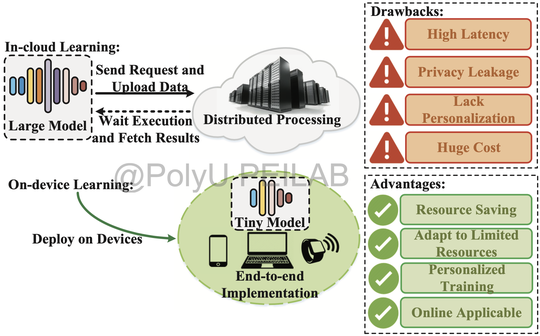

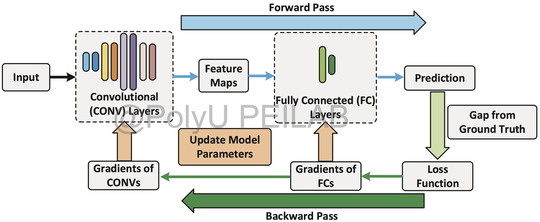

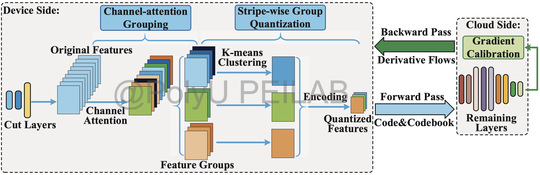

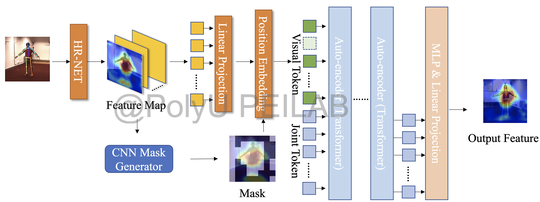

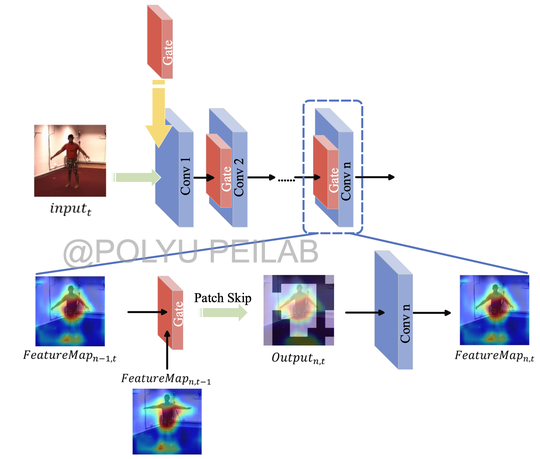

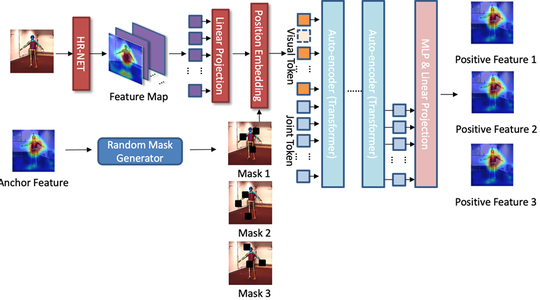

Our research focuses on the software and hardware synergy of on-device learning techniques, covering the scope of model-level neural network design, algorithm-level training optimization and hardware-level arithmetic acceleration.

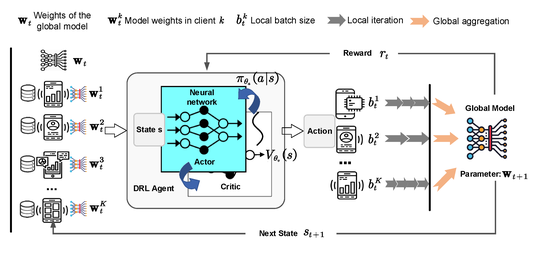

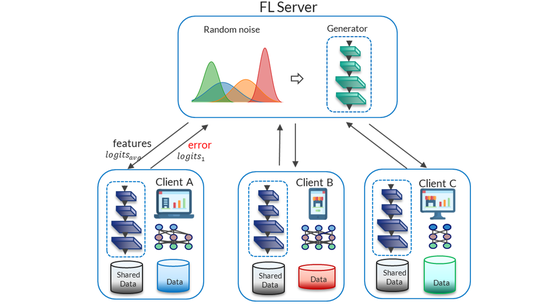

Federated learning (FL) has been proposed as a promising solution for future AI applications with strong privacy protection. It enables distributed computing nodes to collaboratively train models without exposing their own data.
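
The following minimal sketch shows how nodes can contribute to a shared model while only model weights, never raw data, reach the server. It uses FedAvg-style weighted averaging, a common baseline chosen here for illustration; the summary does not say which aggregation rule is used, and the function and variable names are hypothetical.

```python
from typing import List


def federated_average(client_weights: List[List[float]], client_sizes: List[int]) -> List[float]:
    """Average client model weights, weighted by each client's local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client model")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    averaged = [0.0] * len(client_weights[0])
    for weights, size in zip(client_weights, client_sizes):
        for i, value in enumerate(weights):
            averaged[i] += value * size / total
    return averaged


# Two clients upload locally trained weights and sample counts, not their data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```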

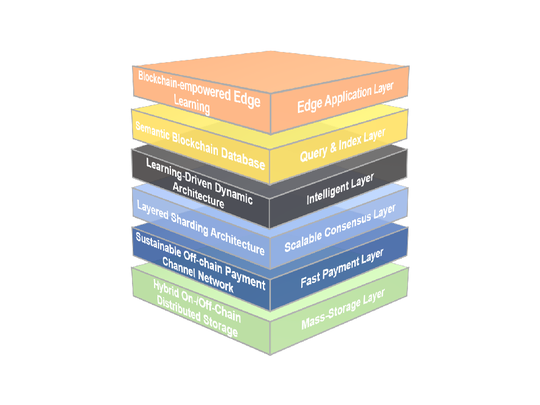

Our team aims to build a next-generation blockchain system that offers scalability, security, privacy, and intelligence. Our proposed architecture is composed of the 6 layers shown above, which are explained below from top to bottom.

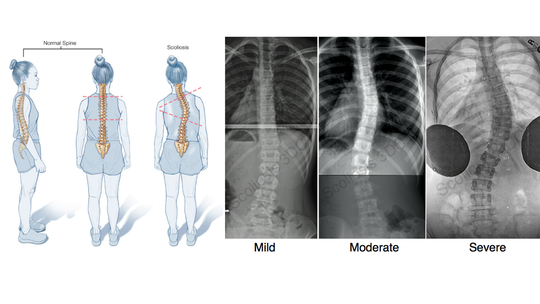

Scoliosis is a sideways curvature of the spine that occurs most often during the growth spurt just before puberty. According to the survey and statistics of China Child Development Center, more than 20% of teens have scoliosis.

To tackle the non-IID data challenge in FL, we aim to design a new method that improves the training efficiency of each client from the perspective of the whole training process.

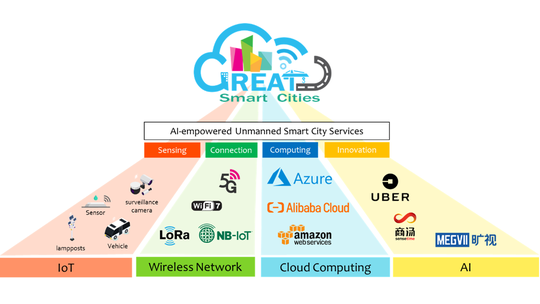

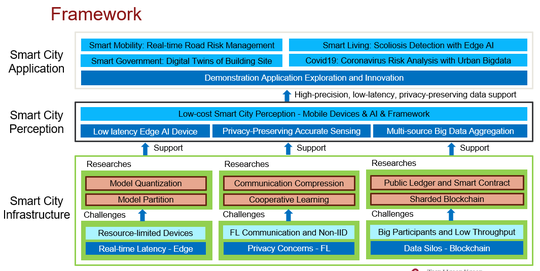

Research Overview

Our team aims to design promising solutions for future AI applications based on edge intelligent technologies, which can empower construction, public health, environment, transportation and other industries, and promote the upgrading of urban intelligence.

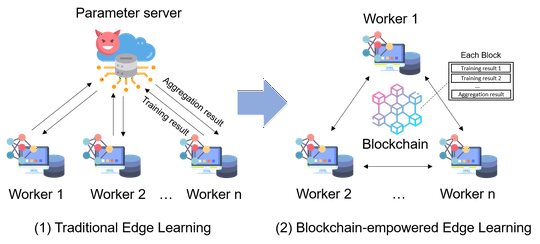

Blockchain-empowered edge learning is a novel distributed learning architecture that dispenses with the dedicated server of traditional distributed learning and provides trustworthy training for edge devices.

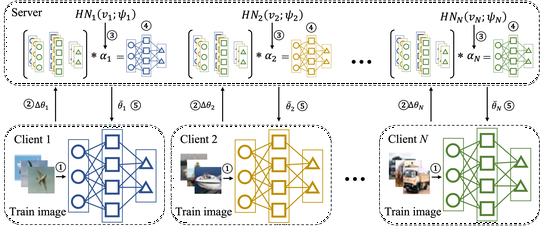

We design a novel personalized federated learning (pFL) training framework, dubbed Layer-wised Personalized Federated learning (pFedLA), that can discern the importance of each layer from different clients and can thus optimize the personalized model aggregation for clients with heterogeneous data.
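
To make the idea of layer-wise personalized aggregation concrete, the sketch below mixes each layer of a client's model using a separate set of per-client weights. The weights are hand-set, hypothetical values; how the framework actually learns layer importance is not described in the summary, and all names here are illustrative.

```python
from typing import Dict, List

Model = Dict[str, List[float]]


def layerwise_aggregate(client_models: List[Model], layer_weights: Dict[str, List[float]]) -> Model:
    """Build one client's personalized model, one layer at a time.

    layer_weights[layer][k] says how much client k's copy of `layer`
    contributes; weights are normalized so each layer is a weighted average.
    """
    personalized: Model = {}
    for layer, weights in layer_weights.items():
        if len(weights) != len(client_models):
            raise ValueError(f"need one weight per client for layer {layer!r}")
        total = sum(weights)
        if total <= 0:
            raise ValueError(f"weights for layer {layer!r} must sum to a positive value")
        mixed = [0.0] * len(client_models[0][layer])
        for model, weight in zip(client_models, weights):
            for i, value in enumerate(model[layer]):
                mixed[i] += (weight / total) * value
        personalized[layer] = mixed
    return personalized


clients = [
    {"features": [1.0, 1.0], "head": [0.0]},
    {"features": [3.0, 5.0], "head": [10.0]},
]
# Client 0 shares the feature layer equally but keeps its own head (assumed weights).
weights_for_client_0 = {"features": [0.5, 0.5], "head": [1.0, 0.0]}
model_0 = layerwise_aggregate(clients, weights_for_client_0)
```

Using different weights per layer lets a client borrow general layers from others while keeping layers that fit its own data distribution.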

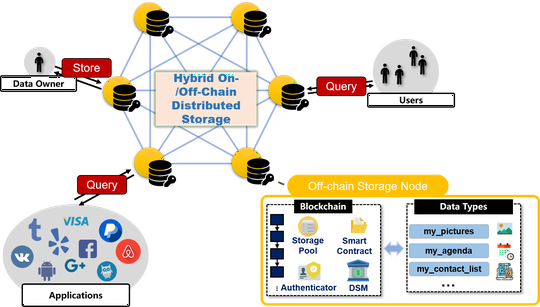

Blockchain databases are a new direction that builds indexes on top of a blockchain to provide rich query functionality. Existing works are either insecure, because the query process is separate from the blockchain consensus, or unscalable, because all the data must be stored in the block. Therefore, we propose an authenticated semantic database layer for blockchains.

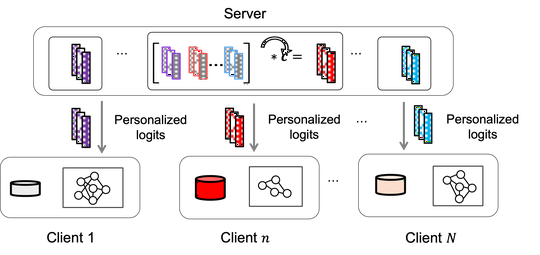

Most existing pFL methods rely on aggregating model parameters at the server side, which requires all models to have the same structure and size. Such constraints prevent current pFL methods from being applied in practical scenarios, where clients often want to own unique models, i.e., models with customized neural architectures that adapt to heterogeneous capacities in computation, communication, storage space, etc. We seek to develop a novel training framework that can accommodate heterogeneous model structures for each client and achieve personalized knowledge transfer in each FL training round.

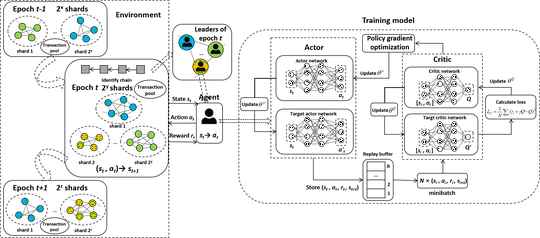

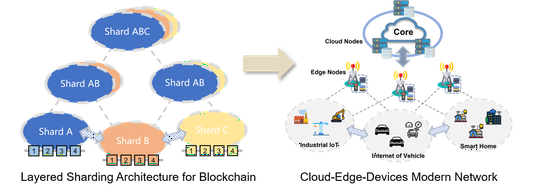

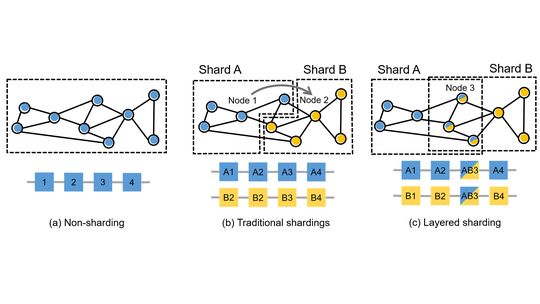

Most existing blockchain systems adopt a static policy that cannot efficiently deal with the dynamic environment of a blockchain system, e.g., nodes joining and leaving, and malicious attacks. Therefore, we propose a novel dynamic sharding-based blockchain framework that achieves a good balance between performance and security without compromising scalability in a dynamic environment.

A few works have designed incentive mechanisms for FL, but these mechanisms only consider myopic optimization of resource consumption, which results in a lack of performance guarantees for the learning algorithm and a lack of long-term sustainability. We propose Chiron, an incentive-driven long-term mechanism for edge learning based on hierarchical deep reinforcement learning.

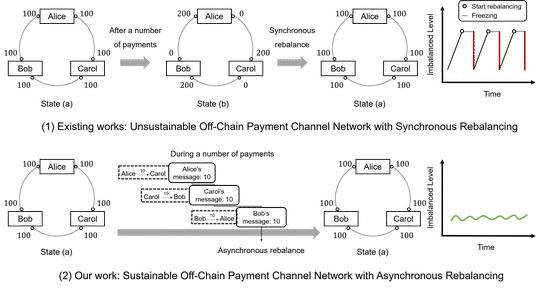

The payment channel network (PCN) is one of the most promising off-chain technologies for supporting massive micropayments on blockchains. The technology has been deployed in a number of blockchains, including Bitcoin and Ethereum.

Personal data produced by widespread cyberspace activities are expected to promote information dissemination and engagement, and even to make business intelligence more powerful.

Physiotherapeutic scoliosis-specific exercises (PSSE) have been proven to be effective in scoliosis rehabilitation. PSSE consist of a program of curve-specific exercise protocols that are individually adapted to a patient's curve site, magnitude, and clinical characteristics.
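
The toy model below illustrates why a payment channel suits micropayments: funds are locked once on-chain, balances are updated off-chain any number of times, and only the final state is settled on-chain. It is a simplified sketch under stated assumptions; signatures, dispute handling, and multi-hop routing across a network are omitted, and the class and attribute names are hypothetical.

```python
from typing import Dict


class PaymentChannel:
    """Two-party channel that counts on-chain transactions and off-chain updates."""

    def __init__(self, deposit_a: int, deposit_b: int) -> None:
        if deposit_a < 0 or deposit_b < 0:
            raise ValueError("deposits must be non-negative")
        self.balances: Dict[str, int] = {"a": deposit_a, "b": deposit_b}
        self.on_chain_transactions = 1  # the funding transaction that opens the channel
        self.off_chain_updates = 0
        self.closed = False

    def pay(self, sender: str, receiver: str, amount: int) -> None:
        """Update balances off-chain; nothing is written to the blockchain."""
        if self.closed:
            raise RuntimeError("channel is closed")
        if sender == receiver or sender not in self.balances or receiver not in self.balances:
            raise ValueError("unknown or identical parties")
        if amount <= 0 or amount > self.balances[sender]:
            raise ValueError("invalid amount")
        self.balances[sender] -= amount
        self.balances[receiver] += amount
        self.off_chain_updates += 1

    def close(self) -> Dict[str, int]:
        """Settle the final balances with a single on-chain transaction."""
        if self.closed:
            raise RuntimeError("channel is already closed")
        self.closed = True
        self.on_chain_transactions += 1
        return dict(self.balances)


channel = PaymentChannel(deposit_a=100, deposit_b=50)
for _ in range(30):
    channel.pay("a", "b", 1)
for _ in range(10):
    channel.pay("b", "a", 2)
final_balances = channel.close()
```

In this example, 40 payments cost only two on-chain transactions, one to open the channel and one to close it.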

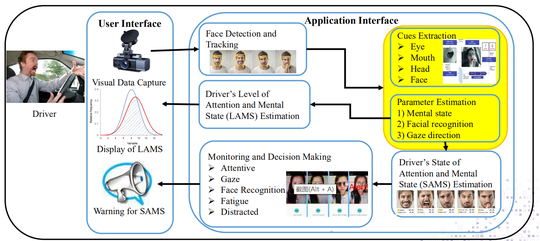

Video-based detection of abnormal driving behavior and mental state is becoming more and more popular. The key goal is to ensure the safety of drivers and passengers in the vehicle, and it is an essential step toward realizing autonomous driving at this stage.

Blockchain draws tremendous attention from academia and industry, since it can provide distributed ledgers with data transparency, integrity, and immutability to untrusted parties for various decentralized applications.

With the emergence and drastic improvement of mobile devices (e.g., phones, tablets, drones, and autonomous vehicles), we are now witnessing an exciting revolution of the digital city.
