Introduction to "AI Computing Cyberinfrastructure"

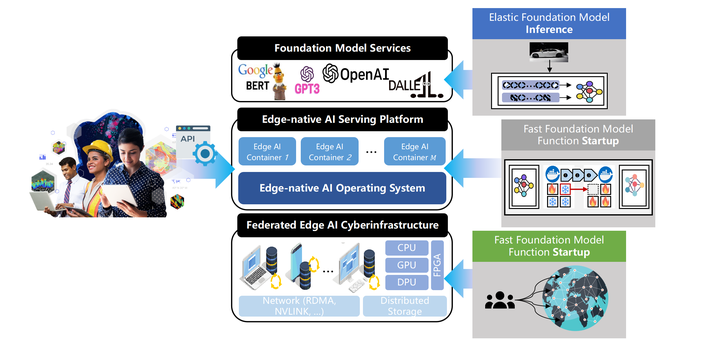

The unprecedented impact of foundation model technology, represented by ChatGPT, is driving a paradigm shift in AI and bringing new opportunities and challenges to many industries. However, the high training, inference, and maintenance costs of foundation models limit their widespread adoption. Edge computing power (e.g., edge servers and personal computers), which accounts for about 90% of the network, has not yet been used effectively. We therefore focus on a new federated edge AI cyberinfrastructure that leverages the natural advantages of edge computing power in cost, latency, and privacy to efficiently aggregate distributed, heterogeneous, multi-party computing power. The goal is for this infrastructure to become an important computing power supplier in the computing power network and to promote the foundation model-based intelligent upgrading of various industries. The infrastructure is divided into the following modules:

- Elastic Foundation Model Inference: A dynamic approach to optimizing the deployment and execution of foundation models (FMs). It leverages elastic computing resources to scale up or down based on current demand and computational requirements. This flexibility lets FMs handle varying workloads efficiently and deliver robust performance across different tasks.

- Fast Foundation Model Function Startup: The rapid initialization and deployment of foundation models to minimize latency and keep applications responsive. This is crucial for real-time applications and other scenarios where time efficiency is paramount.
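
To make the scale-up and scale-down behavior of Elastic Foundation Model Inference concrete, here is a minimal sketch of one possible scaling rule. It is an illustration under stated assumptions, not the project's actual implementation: the function name `desired_replicas`, its parameters, and the default bounds are all hypothetical, and demand is assumed to be measurable as a request rate with a known per-replica throughput.

```python
import math


def desired_replicas(request_rate: float,
                     per_replica_capacity: float,
                     min_replicas: int = 1,
                     max_replicas: int = 8) -> int:
    """Return how many model replicas to run for the current demand.

    Hypothetical helper: `request_rate` and `per_replica_capacity` are
    assumed to be in the same unit (e.g., requests per second).
    """
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")

    # Replicas needed to serve the current load, rounded up.
    needed = math.ceil(request_rate / per_replica_capacity)

    # Clamp to the configured bounds so the pool never empties
    # and never exceeds the available edge resources.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for rate in (0, 25, 1000):
        print(rate, desired_replicas(rate, per_replica_capacity=10))
```

Keeping a nonzero lower bound means at least one replica stays available, which also relates to the startup-latency concern in the second module; a real system would likely add smoothing or cooldown periods to avoid oscillating between replica counts.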