(ICML2024) Causally Motivated Personalized Federated Invariant Learning with Shortcut-Free Information-Theoretic Regularization

This paper introduces a novel method called FedPIN (Personalized Invariant Federated Learning with Shortcut-Averse Information-Theoretic Regularization) to address the out-of-distribution (OOD) generalization problem in personalized federated learning (PFL). By leveraging causal models and information-theoretic constraints, this approach aims to extract personalized invariant features while avoiding the pitfalls of spurious correlations. The key contribution of the paper is the introduction of a causal signature, which distinguishes personalized features from spurious ones using global invariant features as a reference. This causal signature is quantified as an information-theoretic constraint, facilitating shortcut-averse personalized invariant learning on each client.

Key Contributions and Findings

- Heterogeneous Structured Causal Model (SCM): The paper formulates an SCM to interpret Non-IID data distributions across federated clients. It proposes a causal signature that distinguishes between personalized and spurious features using global invariant features as an anchor. This signature is quantified as an information-theoretic constraint to achieve personalized invariant learning on each client.

- Theoretical Analysis and Algorithm Design: The authors theoretically demonstrate that FedPIN can develop the optimal personalized invariant predictor for each client and provide a tighter generalization error bound compared to existing PFL methods under train-test distribution shifts. Additionally, they prove that FedPIN achieves a convergence rate comparable to the classic FedAvg algorithm.

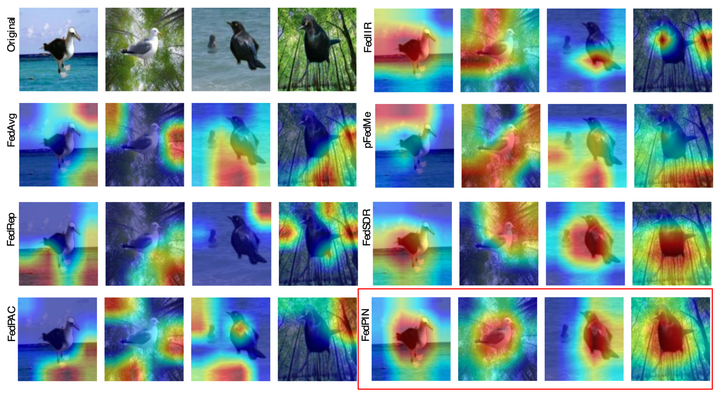

- Experimental Validation: Extensive experiments on various datasets validate the superiority of FedPIN in OOD generalization performance compared to state-of-the-art FL and PFL methods.

Related Work

The paper also reviews related work in invariant learning and heterogeneous federated learning. Invariant learning aims to solve the OOD generalization problem by leveraging invariant features, while heterogeneous federated learning focuses on training either a shared global model or personalized models on Non-IID data.

Conclusion

By introducing causal signatures and information-theoretic constraints, FedPIN successfully balances personalization and generalization, offering a novel solution to the OOD generalization problem in personalized federated learning.

More information refer to Causally Motivated Personalized Federated Invariant Learning with Shortcut-Free Information-Theoretic Regularization, ICML 2024.